AI Builder Survey

On our AI podcast Unsupervised Learning, we’ve had great conversations on the lessons cutting-edge builders are learning deploying generative AI applications. To augment these episodes, we conducted a more formal survey with 40+ leaders at organizations building AI products (both traditional tech companies and startups with over 50 employees) to better understand the ways they’re building applications and the tools they’re using. We wanted to see just how common certain trends we’ve observed are and what builders are expecting moving forward. Here are our top takeaways from the survey:

#1. OpenAI is still the clear fan-favorite with Claude rising in the ranks

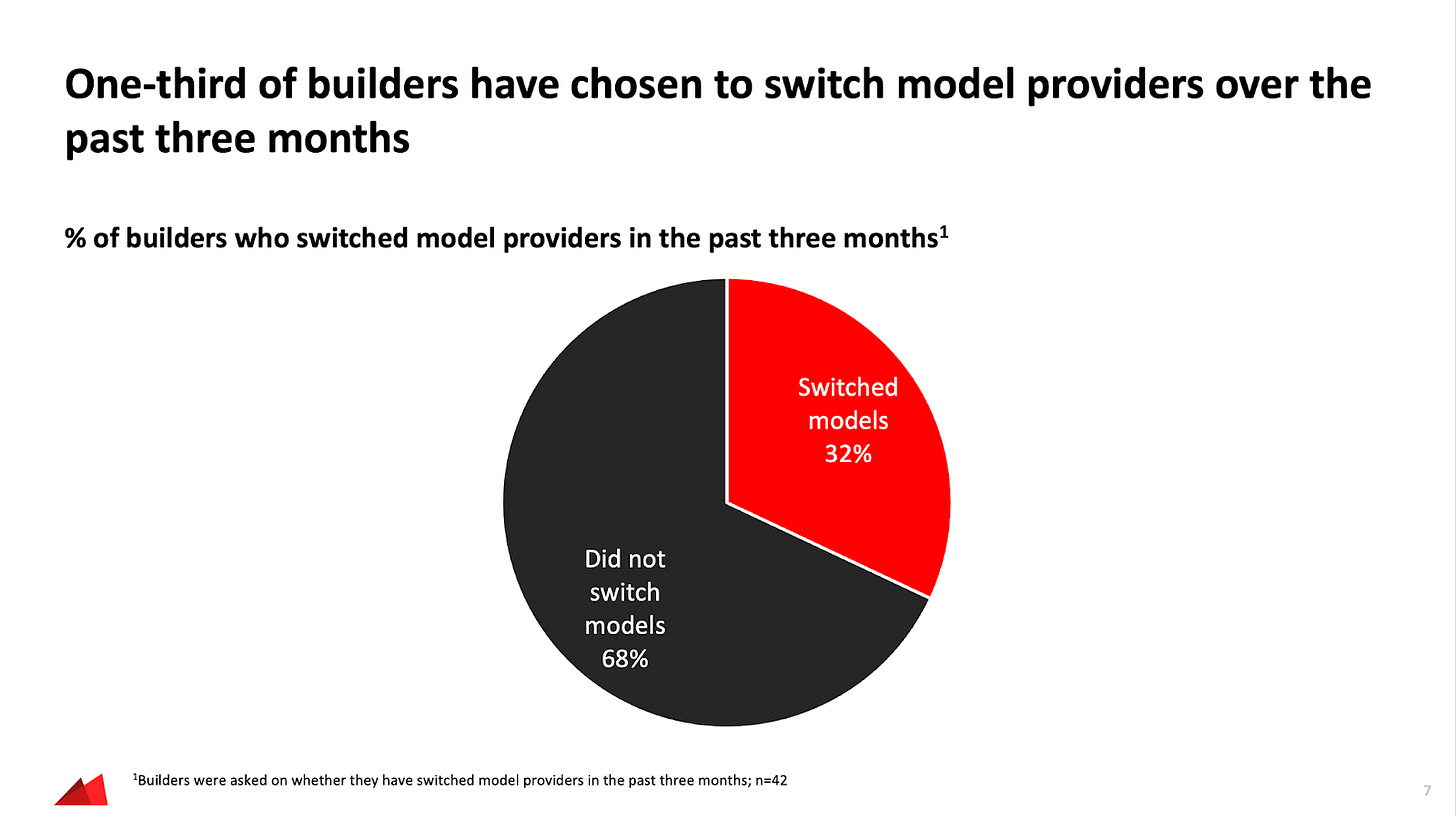

When we conducted this survey in June, OpenAI was still king. Of course, in the AI world months feel like years, and we’d likely see more usage of Anthropic if we reran the survey, given the strength of their models. What was most interesting to us was the movement between models. About one-third of the builders we surveyed switched models in the last three months, searching for better performance or fit. The popularity of larger closed models seems to suggest that we’re still in a phase of discovering potential AI use cases, with cost optimization to come later after larger-scale deployments.

#2. More model expansion and switching expected on the way

Looking ahead, 2 in 5 builders anticipate switching or expanding their model providers within the next few months. The primary factors driving these decisions include the need for better performance, lower costs, speed, and enhanced data security.

In particular, smaller teams (fewer than 20 people working on AI) seemed more likely to switch or expand model providers than larger teams.

#3. RAG and fine-tuning models meet most builders’ needs

On Unsupervised Learning we’ve asked lots of builders for their best practices on fine-tuning and Retrieval-Augmented Generation (RAG). Many builders are using these techniques to improve model performance. Sixty-one percent of builders are utilizing fine-tuning to tailor models to their specific needs, while 56% are leveraging RAG to enhance the efficiency and relevance of their AI applications. This balance is expected to shift slightly in the future, with more builders leaning towards fine-tuning. This should prove particularly effective as companies generate data from user feedback on their deployments of these applications.

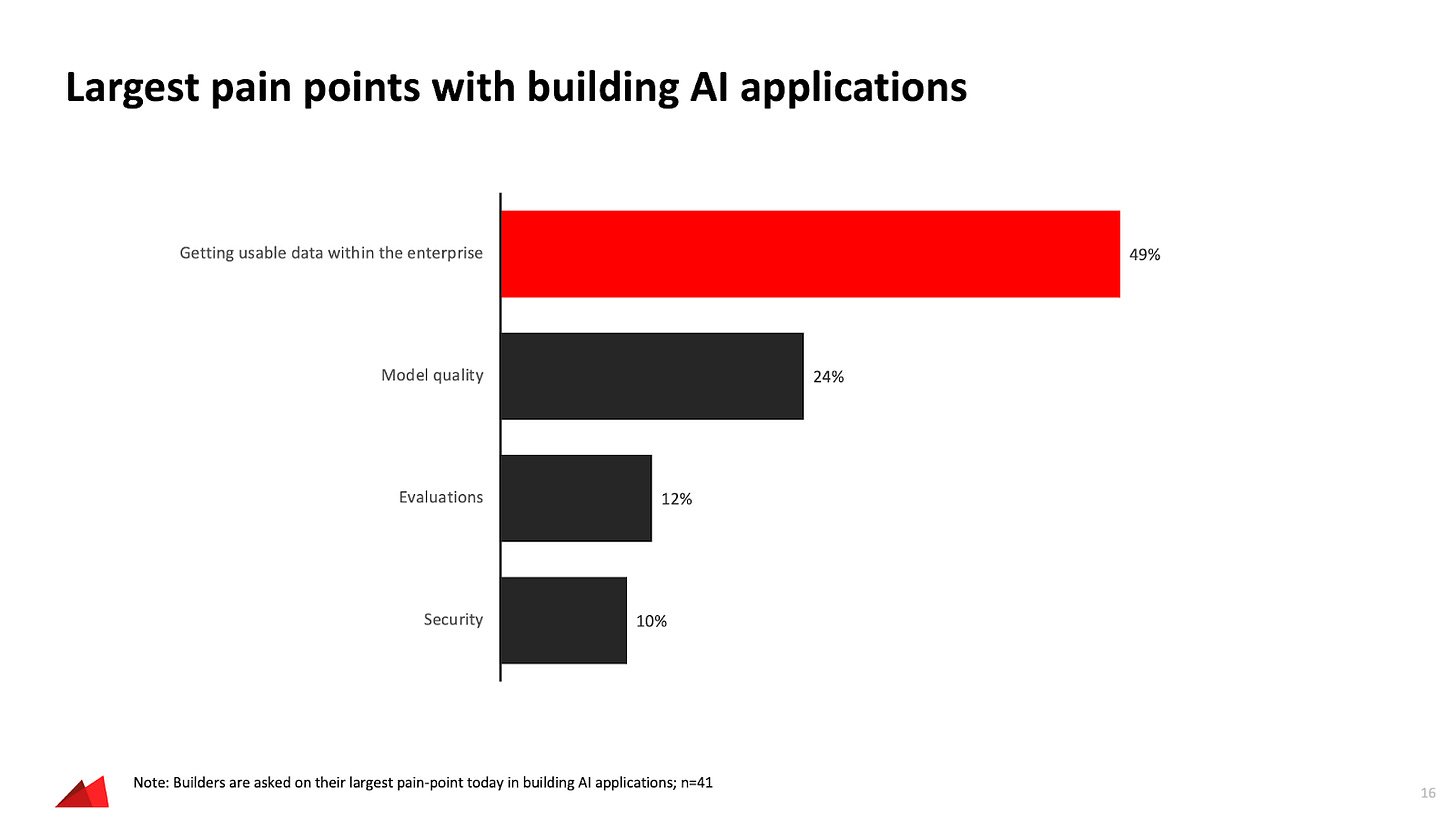

#4. Data remains the biggest pain point in application development

The biggest headache for AI builders is getting usable data (49%). Concerns about model quality (24%) and the evaluation process (12%) follow. Improving data management and having better evaluation tools are crucial for overcoming these hurdles.

#5. Internal use cases dominate

Our survey revealed that a significant majority of builders (93%) are developing internal use cases for generative AI, compared to 59% who are working on external applications. This isn’t entirely surprising: applications that are non-deterministic, occasionally hallucinate, and can be jailbroken by malicious actors are difficult to deploy externally. We’ve seen a plethora of internal use cases emerge that are high value despite LLMs’ shortcomings today, including software development, search summarization, and knowledge management.

For external applications, the focus is primarily on chatbots and advanced search functionalities.

#6. Multi-modal use cases are on the rise

What about non-text-based generative AI use cases? With the buzz around GPT-4o, we asked builders how they are incorporating modalities other than text. Image ingestion and generation use cases led the way, but audio is starting to increase (see our recent episodes with Suno CEO Mikey Shulman and Speak CEO Connor Zwick for more detail on audio-first applications). Video is the least commonly used modality today.

#7. Hosted inference solutions are more widely adopted among larger AI teams

Hosted inference solutions have taken the venture market by storm, with many providers raising significant funding. We were interested in how builders were thinking about these solutions and the most important factors in their selection.

Survey results indicate that hosted inference solutions are most prevalent among larger teams (56%), whereas only about one-third of smaller teams (32%) opted to use them.

Cost and data security are top of mind for builders when choosing inference providers. A substantial 67% of respondents cite cost as a critical factor, followed closely by data security at 56%. These concerns are particularly pronounced among smaller teams, who are more sensitive to budget constraints and data privacy issues.

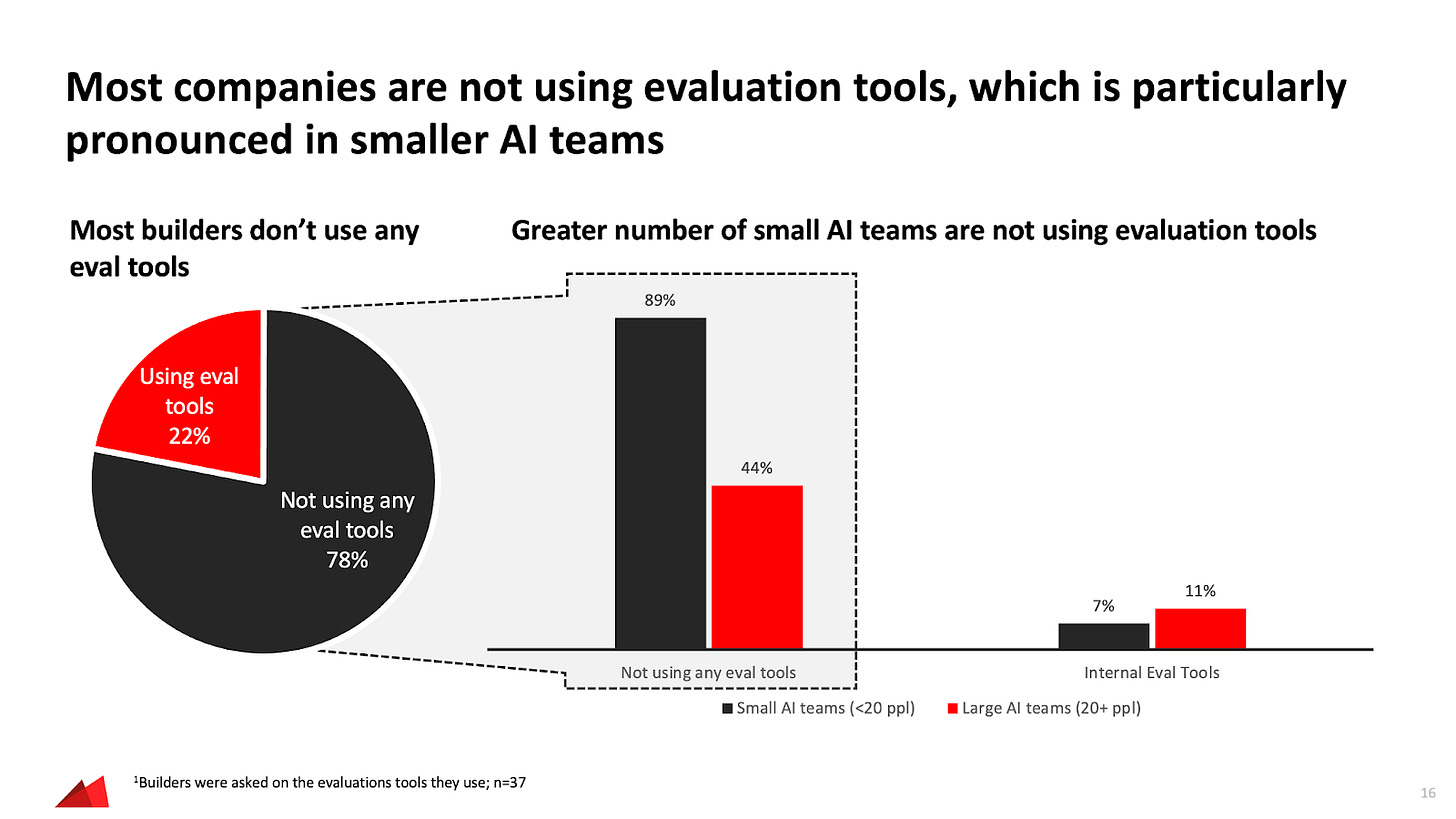

#8. Early innings for evaluation tools

Almost every builder we talk to on Unsupervised Learning is thinking about evaluation. Interestingly, a majority of companies, particularly smaller AI teams, are not using evaluation tools. Another ~10% of respondents indicate that they’re using internal evaluation tools instead. This market feels ripe for disruption over the coming months.

#9. Half of companies are doing a hybrid of separate and embedded AI teams

One question we’ve explored a bunch on Unsupervised Learning is how companies are structuring their AI teams given the many different parts of organizations they touch. Nearly half of all AI builder teams are taking a hybrid approach of both embedding AI talent cross-functionally and having separate teams for common infrastructure.

But some companies have opted for a non-hybrid approach. Notably, one-third of builder respondents in larger teams (21+ builders) sit within completely separate AI teams.

Given how fast the landscape is changing, we’ll see very different results across many of these questions in mere months. We’ll continue to keep our listeners and readers updated on the trends we’re observing through our Unsupervised Learning podcast. Subscribe here for future episodes.
